- Ophthalmology Times: January/February 2025

- Volume 50

- Issue 1

Ophthalmology sees AI-powered impact on cataract and diabetic eye disease

Key Takeaways

- AI significantly improves cataract surgery outcomes through enhanced diagnosis, IOL calculations, and surgical skill assessment, optimizing clinical workflows and patient care.

- Autonomous AI systems for diabetic retinopathy, like LumineticsCore, demonstrate high diagnostic accuracy, enabling specialty-level diagnostics in primary care settings.

Shameema Sikder, MD; and T. Y. Alvin Liu, MD, highlight the technology’s role in bridging challenges and successes for clinical practice.

Artificial intelligence (AI) is developing rapidly and is transforming clinical practice and how physicians allot their time. Shameema Sikder, MD, and T. Y. Alvin Liu, MD, discussed AI’s real-world impact on the management of cataract and diabetic eye disease.

AI’s contributions to the management of cataracts

Tens of thousands of

Diagnosis

Sikder cited results from a recent study3 showing that a deep learning system, DeepLensNet, could automatically and quantitatively classify cataract severity for all 3 types of age-related cataract. For the nuclear sclerosis and cortical lens opacity types, “the accuracy was significantly superior to that of ophthalmologists; for the least common type, posterior subcapsular cataract, it was similar,” the authors reported.

The authors concluded, “DeepLensNet may have wide potential applications in both clinical and research domains. In the future, such approaches may increase the accessibility of cataract assessment globally.”

An older innovation allows a smartphone to test vision effectively. Peek, an app developed by British ophthalmologist Andrew Bastawrous, was shown to be as accurate as standard methods of testing vision.4

Calculating IOL power has been a challenge, but AI has been applied successfully both to optimize existing IOL formulas and to develop hybrid AI-based formulas.5 In that 2021 study, the investigators determined the mean absolute error of each IOL formula with and without AI enhancement, as well as the number of eyes within 0.5 diopter (D) of the predicted refraction.

The authors found that “AI algorithms improved the mean absolute error as well as the number of eyes within 0.5 D of the predicted refraction for each of the formulae tested (P < .05).” The study tested the Holladay 1, SRK/T, and Ladas Super Formula. “This methodology has the potential to improve clinical outcomes for patients [undergoing] cataract surgery,” they stated.
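The two accuracy measures used in the IOL study can be sketched in a few lines of Python. This is a minimal illustration, assuming refraction is expressed as spherical equivalent in diopters and that the prediction error is the achieved minus the predicted refraction; the function names and all sample refractions are hypothetical, not data from the study.

```python
from statistics import mean


def prediction_errors(predicted_d, achieved_d):
    """Signed errors in diopters: achieved minus predicted refraction."""
    if len(predicted_d) != len(achieved_d):
        raise ValueError("predicted and achieved lists must have the same length")
    if not predicted_d:
        raise ValueError("need at least one eye")
    return [a - p for p, a in zip(predicted_d, achieved_d)]


def summarize(predicted_d, achieved_d, tolerance_d=0.5):
    """Mean absolute error plus count and percentage of eyes within +/- tolerance_d."""
    errors = prediction_errors(predicted_d, achieved_d)
    within = sum(1 for e in errors if abs(e) <= tolerance_d)
    return {
        "mae_d": mean(abs(e) for e in errors),
        "eyes_within": within,
        "pct_within": 100 * within / len(errors),
    }


if __name__ == "__main__":
    achieved = [-0.75, 0.10, -0.30, 1.00]     # hypothetical postoperative refractions
    formula = [-0.25, 0.00, -0.50, 0.25]      # hypothetical formula predictions
    ai_adjusted = [-0.60, 0.05, -0.40, 0.70]  # hypothetical AI-adjusted predictions
    print("formula only:", summarize(formula, achieved))
    print("with AI:     ", summarize(ai_adjusted, achieved))
```

Comparing the two summaries side by side mirrors the with/without AI comparison the authors report, one formula at a time.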

Intraoperative use of AI

Sikder pointed out that AI can support automated situation awareness technologies through tool recognition, anatomic segmentation, and phase/step recognition. A 2019 study6 assessed AI-based automated identification of surgical phases in videos of 100 cataract procedures.

The authors reported that “modeling time series of labels of instruments in use appeared to yield greater accuracy in classifying the phases of cataract operations than modeling cross-sectional data on instrument labels, spatial video image features, spatiotemporal video image features, or spatiotemporal video image features with appended instrument labels.” The authors believe that “time series modeling of instrument labels and video images using deep learning techniques may yield potentially useful tools for the automated detection of phases in cataract surgery procedures.”
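To illustrate why a time series of instrument labels can outperform frame-by-frame (cross-sectional) classification, the sketch below decodes phases with a hidden Markov model and the Viterbi algorithm. This is a swapped-in classical technique, not the machine learning and deep learning models used in the 2019 study; the 3 phases, the instrument names, and every probability are hypothetical.

```python
import math

# Hypothetical, simplified model: 3 phases, 3 instrument labels, made-up probabilities.
PHASES = ("incision", "capsulorhexis", "phacoemulsification")
START = {"incision": 1.0, "capsulorhexis": 0.0, "phacoemulsification": 0.0}
# Left-to-right transitions: a phase either continues or advances to the next one.
TRANSITION = {
    "incision": {"incision": 0.9, "capsulorhexis": 0.1, "phacoemulsification": 0.0},
    "capsulorhexis": {"incision": 0.0, "capsulorhexis": 0.9, "phacoemulsification": 0.1},
    "phacoemulsification": {"incision": 0.0, "capsulorhexis": 0.0, "phacoemulsification": 1.0},
}
# Probability of observing each instrument label during each phase.
EMISSION = {
    "incision": {"keratome": 0.8, "forceps": 0.1, "phaco_probe": 0.1},
    "capsulorhexis": {"keratome": 0.1, "forceps": 0.8, "phaco_probe": 0.1},
    "phacoemulsification": {"keratome": 0.05, "forceps": 0.15, "phaco_probe": 0.8},
}


def log(p):
    """Log-probability, with log(0) mapped to negative infinity."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(labels):
    """Most likely phase for every frame, given per-frame instrument labels."""
    if not labels:
        return []
    # score[ph]: best log-probability of any phase path ending in ph at the current frame
    score = {ph: log(START[ph]) + log(EMISSION[ph][labels[0]]) for ph in PHASES}
    backpointers = []
    for label in labels[1:]:
        new_score, pointers = {}, {}
        for ph in PHASES:
            prev = max(PHASES, key=lambda q: score[q] + log(TRANSITION[q][ph]))
            new_score[ph] = score[prev] + log(TRANSITION[prev][ph]) + log(EMISSION[ph][label])
            pointers[ph] = prev
        score = new_score
        backpointers.append(pointers)
    # Trace the best path backward from the most likely final phase.
    path = [max(PHASES, key=lambda ph: score[ph])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    frames = ["keratome", "keratome", "forceps", "forceps", "keratome", "phaco_probe", "phaco_probe"]
    print(viterbi(frames))
```

Read frame by frame, the stray keratome label in the fifth frame would suggest a return to the incision phase. Because the hypothetical transition model only lets a phase continue or advance, the decoder keeps that frame in capsulorhexis, which is the kind of temporal context a cross-sectional classifier lacks.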

Another factor that AI can assess is surgical skill in the operating room, with the goal of improving patient outcomes and quality of care through surgical training and coaching. In one study,7 investigators formulated a new method based on attention mechanisms and provided a comprehensive comparative analysis of state-of-the-art methods for video-based assessment of surgical skill in the operating room, Sikder explained.

Using 99 RGB videos of capsulorhexis, the investigators evaluated image feature–based methods and 2 deep learning methods for assessing skill. In the first deep learning method, they predicted the instrument tips as key points and then predicted surgical skill using temporal convolutional neural networks (CNNs); in the second, they used a framewise encoder (2-dimensional CNN) followed by a temporal model (recurrent neural network), both augmented by visual attention mechanisms, the authors explained.

They reported that when classifying a binary skill label (ie, expert vs novice), estimates of the area under the receiver operating characteristic curve (AUC) ranged from 0.49 to 0.76 for image feature–based methods. Sensitivity and specificity were not consistently high for any method. For the deep learning methods, the AUC was 0.79 using key points alone and 0.78 and 0.75 with and without attention mechanisms, respectively.
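AUC has a concrete reading that helps interpret the 0.49 to 0.79 range: it is the probability that a randomly chosen expert video receives a higher skill score than a randomly chosen novice video, so 0.5 corresponds to chance. A minimal Python sketch of that rank-based (Mann-Whitney) computation follows; the scores and the 1 = expert, 0 = novice encoding are assumptions for illustration.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise (Mann-Whitney) comparisons.

    labels: 1 for expert, 0 for novice (hypothetical encoding).
    scores: the model's score for "expert"; higher means more expert-like.
    A tie between an expert and a novice score counts as half a win.
    """
    experts = [s for s, y in zip(scores, labels) if y == 1]
    novices = [s for s, y in zip(scores, labels) if y == 0]
    if not experts or not novices:
        raise ValueError("need at least one expert and one novice video")
    wins = 0.0
    for e in experts:
        for n in novices:
            if e > n:
                wins += 1.0
            elif e == n:
                wins += 0.5
    return wins / (len(experts) * len(novices))


if __name__ == "__main__":
    labels = [1, 1, 1, 0, 0, 0]              # hypothetical ground truth
    scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2]  # hypothetical model outputs
    print(round(auc(scores, labels), 3))     # 0.889: 8 of 9 expert-novice pairs ranked correctly
```

The pairwise loop is quadratic, which is fine for a few hundred videos; a sort-based rank computation gives the same value faster on large sets.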

The study7 authors concluded, “Deep learning methods are necessary for video-based assessment of surgical skill in the operating room. Attention mechanisms improved the discrimination ability of the network.”

In a recent extension of this research,8 Sikder’s team developed another AI model that uses the location of a surgical instrument in the video images to supervise the attention mechanisms. The authors concluded that the “explicit supervision of attention learned from images using instrument tip locations can improve performance of algorithms for objective video-based assessment of surgical skill.” In other words, supervising the attention not only improved the AI model but also allowed it to perform well on videos from new surgeons.
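One way to picture attention supervision is as an extra loss term that pulls the network's spatial attention map toward the annotated instrument tip. The sketch below assumes a Gaussian target heatmap centered on the tip and a Kullback-Leibler divergence penalty added to the skill classification loss; these choices, the grid size, the weight, and all function names are hypothetical and may differ from the formulation Sikder's team used.

```python
import math


def softmax_2d(logits):
    """Turn an H x W grid of attention logits into a probability map."""
    m = max(v for row in logits for v in row)
    exps = [[math.exp(v - m) for v in row] for row in logits]
    total = sum(sum(row) for row in exps)
    return [[v / total for v in row] for row in exps]


def tip_heatmap(height, width, tip_row, tip_col, sigma=1.0):
    """Normalized Gaussian target centered on the annotated instrument-tip cell."""
    raw = [[math.exp(-((r - tip_row) ** 2 + (c - tip_col) ** 2) / (2 * sigma ** 2))
            for c in range(width)] for r in range(height)]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]


def attention_supervision_loss(attention, target, eps=1e-12):
    """KL(target || attention): small when attention concentrates near the tip."""
    return sum(t * math.log((t + eps) / (a + eps))
               for t_row, a_row in zip(target, attention)
               for t, a in zip(t_row, a_row))


def total_loss(skill_loss, attention, target, weight=0.5):
    """Hypothetical combined objective: skill loss plus weighted attention penalty."""
    return skill_loss + weight * attention_supervision_loss(attention, target)


if __name__ == "__main__":
    target = tip_heatmap(5, 5, tip_row=1, tip_col=1)
    near_tip = softmax_2d([[4.0 if (r, c) == (1, 1) else 0.0 for c in range(5)] for r in range(5)])
    elsewhere = softmax_2d([[4.0 if (r, c) == (4, 4) else 0.0 for c in range(5)] for r in range(5)])
    print("attention near tip: ", round(attention_supervision_loss(near_tip, target), 3))
    print("attention elsewhere:", round(attention_supervision_loss(elsewhere, target), 3))
    print("combined (hypothetical skill loss 0.69):", round(total_loss(0.69, near_tip, target), 3))
```

During training, the penalty grows whenever the model attends away from the instrument, which is one plausible reason such supervision could help a model generalize to videos it has not seen.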

AI in the postoperative period

Ocular images are essential to ophthalmology. Sikder cited a study9 that sought to predict the progression of ophthalmic disease, with the goals of planning treatment strategies and providing patients with early warning regarding disease progression.

The study authors developed an end-to-end temporal sequence network (TempSeq-Net) to predict ophthalmic disease progression automatically. It uses a CNN to extract high-level features from consecutive slit-lamp images and a long short-term memory (LSTM) recurrent neural network to mine the temporal relationships among those features. The authors compared 6 combinations of CNNs and LSTM for effectiveness and efficiency to identify the optimal TempSeq-Net model, and then analyzed how sequence length affects performance to assess the model’s stability and validity and to determine an appropriate range of sequence lengths.

According to the authors, the quantitative results demonstrated that the model performs exceptionally well, with a mean accuracy of 92.22%, sensitivity of 88.55%, specificity of 94.31%, and AUC of 97.18%. The model also predicts in real time, requiring only 27.6 milliseconds per sequence, and can handle sequences of 3 to 5 images. “Our study provides a promising strategy for the progression of ophthalmic disease and has the potential to be applied in other medical fields,” the authors concluded.
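The recurrent half of the TempSeq-Net design, an LSTM that reads per-image features in temporal order, can be sketched in plain Python. This is an untrained, illustrative model under stated assumptions: each slit-lamp image is already reduced to a short feature vector (standing in for the CNN), the class name `TinyLSTM` and all sizes are hypothetical, and the weights are random rather than learned.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


class TinyLSTM:
    """Single-layer LSTM over per-image feature vectors, plus a logistic output.

    Hypothetical, untrained illustration: each input vector stands in for the CNN
    features of one slit-lamp image, and all weights are small random numbers.
    """

    GATES = ("input", "forget", "candidate", "output")

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        width = input_size + hidden_size  # each gate sees [x_t, h_{t-1}]
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.weights = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(width)] for _ in range(hidden_size)]
            for gate in self.GATES
        }
        self.biases = {gate: [0.0] * hidden_size for gate in self.GATES}
        self.readout = [rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]

    def predict(self, sequence):
        """Return a progression probability for a time-ordered list of feature vectors."""
        h = [0.0] * self.hidden_size  # hidden state
        c = [0.0] * self.hidden_size  # cell state
        for features in sequence:     # one step per image, oldest first
            if len(features) != self.input_size:
                raise ValueError("feature vector has the wrong length")
            xh = list(features) + h
            pre = {
                gate: [z + b for z, b in zip(matvec(self.weights[gate], xh), self.biases[gate])]
                for gate in self.GATES
            }
            i = [sigmoid(z) for z in pre["input"]]
            f = [sigmoid(z) for z in pre["forget"]]
            g = [math.tanh(z) for z in pre["candidate"]]
            o = [sigmoid(z) for z in pre["output"]]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return sigmoid(sum(w * hk for w, hk in zip(self.readout, h)))


if __name__ == "__main__":
    rng = random.Random(42)
    model = TinyLSTM(input_size=4, hidden_size=3)
    for length in (3, 4, 5):  # sequence lengths like those the authors evaluated
        visits = [[rng.random() for _ in range(4)] for _ in range(length)]
        print(length, round(model.predict(visits), 4))
```

Because the same cell is applied at every step, the model accepts sequences of different lengths without any change to its weights.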

The future

Adopting AI technologies seems to be the challenge of the future, Sikder and colleagues pointed out in an editorial.10 FDA approval does not ensure acceptance by health care decision makers. “Adoption of technologies and their translation into a societal impact require informed consumers who can apply useful information and ignore irrelevant information produced by the technologies,” they commented.

AI technologies can produce biased predictions, and as the algorithms continue to learn, the applications may need to be modified. In addition, the algorithms may not generalize to all patient populations, so physicians who use AI technologies to care for patients have a critical role in improving them: they are best positioned to observe and report when AI fails. Data on AI failure modes are crucial to drive the fundamental research needed to make AI accurate and effective for all patients.

“We cannot be content with the AI technologies being created for patient care. Doctors who use AI technologies have a responsibility and a unique opportunity to make them work for every patient and promote trust on the technologies,” Sikder commented.

Autonomous AI for diabetic eye disease in the real world

The expanding use of AI across the US and the world raises questions and concerns. Liu reviewed medical AI regulations in the US, the current status of AI for diabetic retinopathy (DR) screening in the US, and the successes, challenges, and uncertainties of real-world deployment. He is an endowed professor and inaugural director of the James P. Gills Jr MD and Heather Gills Artificial Intelligence Innovation Center, cochair of the imaging committee on the Artificial Intelligence and Data Trust Council, and member of the AI Oversight Team at Johns Hopkins Medicine in Baltimore, Maryland, as well as a member of the AI Committee of the American Academy of Ophthalmology.

FDA regulation

Medical devices are classified by the FDA into Class I, II, or III, and the ultimate classification reflects the risk of the device being investigated. Most AI-enabled medical devices are Class II (moderate risk) and were cleared to enter the US marketplace via the De Novo or 510(k) pathways. The De Novo pathway is meant for novel use cases, for which there is no precedent, and is therefore more demanding than 510(k).

As of May 2024, 882 AI-enabled medical devices had been authorized by the FDA, 9 of them ophthalmic. Currently, 4 autonomous AI products from 3 companies (Digital Diagnostics, Eyenuk, and AEYE Health) are cleared by the FDA to test for diabetic eye disease.

Liu cited trials that took place along the pathway to approval. The first was a prospective, multisite US trial of LumineticsCore (Digital Diagnostics)11 that included 900 participants with no history of DR. The investigators reported, “The AI system exceeded all prespecified superiority end points at a sensitivity of 87.2% (95% CI, 81.8%-91.2%) (> 85%), a specificity of 90.7% (95% CI, 88.3%-92.7%) (> 82.5%), and imageability rate of 96.1% (95% CI, 94.6%-97.3%), demonstrating AI’s ability to bring specialty-level diagnostics to primary care settings.” The system was cleared by the FDA to detect referable DR (more than mild nonproliferative DR) and was the first autonomous AI system approved by the FDA in any medical field.
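The trial end points above are proportions: sensitivity and specificity come from a 2 × 2 table of AI output vs reference standard, and the imageability rate is the share of participants whose images the system could grade. A minimal Python sketch follows, assuming Wilson score intervals for the 95% CIs; the trial's own interval method may differ, and the counts in the usage line are hypothetical.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Approximate 95% Wilson score interval for a proportion."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z ** 2 / total
    center = (p + z ** 2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def screening_metrics(tp, fn, tn, fp, imageable, enrolled):
    """Point estimates and Wilson intervals for the three trial-style end points."""
    return {
        "sensitivity": (tp / (tp + fn), wilson_interval(tp, tp + fn)),
        "specificity": (tn / (tn + fp), wilson_interval(tn, tn + fp)),
        "imageability": (imageable / enrolled, wilson_interval(imageable, enrolled)),
    }


if __name__ == "__main__":
    # Hypothetical counts, not the pivotal trial's data.
    for name, (value, (lo, hi)) in screening_metrics(90, 10, 80, 20, 95, 100).items():
        print(f"{name}: {value:.1%} (95% CI, {lo:.1%}-{hi:.1%})")
```

Note that sensitivity is computed only among participants with referable disease and specificity only among those without it, which is why the two intervals can differ in width.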

In terms of real-world implementation of autonomous AI for diabetic eye disease, findings from a study12 that measured adoption of AI devices indicated that they are beginning to come into their own.

The authors reported that most usage is “driven by a handful of leading devices. For example, only AI devices used for assessing coronary artery disease and for diagnosing diabetic retinopathy have accumulated more than 10,000 Current Procedural Terminology [CPT] claims.” They also found that higher-income zip codes in metropolitan areas with academic medical centers were much more likely to have medical AI usage. They cited the need to investigate barriers and incentives to promote equitable access and broader integration of AI technologies in health care.

Successes

Liu cited the 2021 CPT update that added code 92229 (Imaging of retina for detection or monitoring of disease; point-of-care autonomous analysis and report; unilateral or bilateral). Another success was the willingness of most major insurance payers, including the Centers for Medicare & Medicaid Services, to provide national reimbursement coverage for CPT 92229, with payment ranging from approximately $60 to $120. Finally, endorsements from professional organizations, such as the American Diabetes Association and the National Committee for Quality Assurance, were also critical, he said.

Challenges

More work needs to be done. One challenge is the geographic variation in clinical AI implementation noted in the adoption study mentioned above,12 with higher-income zip codes adopting the technology more readily.

Another shortfall is nondiagnostic AI results due to insufficient retinal image quality.13 Findings from a recent study led by Liu identified factors associated with nondiagnostic results. These factors could be used to design a novel, predictive dilation workflow in which patients most likely to benefit from pharmacologic dilation are dilated a priori, maximizing the number of at-risk patients who complete diabetic retinal examinations.
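In practice, a predictive dilation workflow amounts to a risk model plus a decision threshold. The Python sketch below uses a logistic model; the predictors, coefficients, and 0.3 threshold are hypothetical placeholders, not the factors or values reported in the Liu-led study.

```python
import math

# Hypothetical coefficients for illustration only; they are NOT estimates from the study.
HYPOTHETICAL_COEFFICIENTS = {
    "intercept": -3.0,
    "per_decade_over_50": 0.5,
    "prior_nondiagnostic": 2.0,
}


def nondiagnostic_risk(patient, coef=HYPOTHETICAL_COEFFICIENTS):
    """Logistic-model probability that an undilated AI exam will be nondiagnostic."""
    decades_over_50 = max(0.0, (patient["age_years"] - 50) / 10)
    z = (coef["intercept"]
         + coef["per_decade_over_50"] * decades_over_50
         + coef["prior_nondiagnostic"] * (1.0 if patient["prior_nondiagnostic"] else 0.0))
    return 1.0 / (1.0 + math.exp(-z))


def plan_exam(patient, threshold=0.3):
    """Dilate a priori when predicted risk meets the (hypothetical) threshold."""
    risk = nondiagnostic_risk(patient)
    return ("dilate_first" if risk >= threshold else "image_undilated"), risk


if __name__ == "__main__":
    clinic_list = [
        {"id": "A", "age_years": 42, "prior_nondiagnostic": False},
        {"id": "B", "age_years": 78, "prior_nondiagnostic": True},
    ]
    for patient in clinic_list:
        action, risk = plan_exam(patient)
        print(patient["id"], action, round(risk, 2))
```

Lowering the threshold dilates more patients up front, trading clinic time for fewer nondiagnostic results; where to set it is a workflow decision, not something the sketch resolves.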

The final challenge Liu cited is heterogeneous outcomes after health system–wide deployment of autonomous AI for diabetic eye disease. This challenge was highlighted in another study led by Liu, conducted at Johns Hopkins Medicine and published in npj Digital Medicine. For example, from 2019 to 2021, the adherence rate for annual DR examinations increased by 12.2% among Black or African American individuals, whereas over the same period the rate for Asian American individuals barely changed. Beyond real-world implementation, other considerations, such as future reimbursement rates for CPT 92229 and requirements for postmarketing monitoring of AI-enabled medical devices, add uncertainty to the evolving landscape of ophthalmic AI products.
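A reported change in adherence such as 12.2% can describe either an absolute change in percentage points or a relative change, and the figure alone does not say which. The short Python sketch below computes both readings from hypothetical counts of examined and eligible patients; the function names are illustrative.

```python
def adherence_rate(examined, eligible):
    """Share of eligible patients who completed an annual diabetic eye exam."""
    if eligible <= 0:
        raise ValueError("eligible must be positive")
    return examined / eligible


def describe_change(rate_before, rate_after):
    """Return (absolute change in percentage points, relative change in percent)."""
    absolute_pp = 100 * (rate_after - rate_before)
    relative_pct = 100 * (rate_after - rate_before) / rate_before
    return absolute_pp, relative_pct


if __name__ == "__main__":
    before = adherence_rate(400, 1000)  # hypothetical baseline-year counts
    after = adherence_rate(520, 1000)   # hypothetical follow-up-year counts
    pp, rel = describe_change(before, after)
    print(f"{pp:.1f} percentage points, or {rel:.1f}% relative")  # 12.0 percentage points, or 30.0% relative
```

The same underlying change can therefore be reported as 12 or 30, so comparisons across groups are only meaningful when they use the same convention.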

Shameema Sikder, MD

Sikder is the L. Douglas Lee and Barbara Levinson-Lee Professor of Ophthalmology and director of the Center of Excellence for Ophthalmic Surgical Education and Training at Johns Hopkins Hospital in Baltimore, Maryland. She has no financial interest in this subject matter.

T. Y. Alvin Liu, MD

Liu is an endowed professor and inaugural director of the James P. Gills Jr MD and Heather Gills Artificial Intelligence Innovation Center, cochair of the imaging committee on the Artificial Intelligence and Data Trust Council, and member of the AI Oversight Team at Johns Hopkins Medicine in Baltimore, Maryland, as well as a member of the AI Committee of the American Academy of Ophthalmology. He has no financial interest in this subject matter.

References:

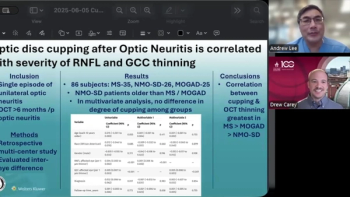

Sikder S. AI evaluation of cataract surgery videos. Presented at: Johns Hopkins Wilmer Eye Institute’s 37th Annual Current Concepts in Ophthalmology; December 5, 2024; Baltimore, MD.

Liu TYA. Autonomous AI for diabetic eye disease in real world setting. Presented at: Johns Hopkins Wilmer Eye Institute’s 37th Annual Current Concepts in Ophthalmology; December 6, 2024; Baltimore, MD.

Keenan TDL, Chen Q, Agrón E, et al; AREDS Deep Learning Research Group. DeepLensNet: deep learning automated diagnosis and quantitative classification of cataract type and severity. Ophthalmology. 2022;129(5):571-584. doi:10.1016/j.ophtha.2021.12.017

Sohn E. Smartphones are so smart they can now test your vision. National Public Radio. May 28, 2015. Accessed January 17, 2025. https://www.npr.org/sections/goatsandsoda/2015/05/28/409731415/smart-phones-are-so-smart-they-can-now-test-your-vision

Ladas J, Ladas D, Lin SR, Devgan U, Siddiqui AA, Jun AS. Improvement of multiple generations of intraocular lens calculation formulae with a novel approach using artificial intelligence. Transl Vis Sci Technol. 2021;10(3):7. doi:10.1167/tvst.10.3.7

Yu F, Croso GS, Kim TS, et al. Assessment of automated identification of phases in videos of cataract surgery using machine learning and deep learning techniques. JAMA Netw Open. 2019;2(4):e191860. doi:10.1001/jamanetworkopen.2019.1860

Hira S, Singh D, Kim TS, et al. Video-based assessment of intraoperative surgical skill. Int J Comput Assist Radiol Surg. 2022;17(10):1801-1811. doi:10.1007/s11548-022-02681-5

Wan B, Peven M, Hager G, Sikder S, Vedula SS. Spatial-temporal attention for video-based assessment of intraoperative surgical skill. Sci Rep. 2024;14(1):26912. doi:10.1038/s41598-024-77176-1

Jiang J, Liu X, Liu L, et al. Predicting the progression of ophthalmic disease based on slit-lamp images using a deep temporal sequence network. PLoS One. 2018;13(7):e0201142. doi:10.1371/journal.pone.0201142

Vedula SS, Tsou BC, Sikder S. Artificial intelligence in clinical practice is here-now what? JAMA Ophthalmol. 2022;140(4):306-307. doi:10.1001/jamaophthalmol.2022.0040

Abràmoff MD, Lavin PT, Birch M, Shah N, Folk JC. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit Med. 2018;1:39. doi:10.1038/s41746-018-0040-6

Shou BL, Venkatesh K, Chen C, et al. Risk factors for nondiagnostic imaging in a real-world deployment of artificial intelligence diabetic retinal examinations in an integrated healthcare system: maximizing workflow efficiency through predictive dilation. J Diabetes Sci Technol. 2024;18:302-308. doi:10.1177/19322968231201654

Huang JJ, Channa R, Wolf RM, et al. Autonomous artificial intelligence for diabetic eye disease increases access and health equity in underserved populations. NPJ Digit Med. 2024;7(1):196. doi:10.1038/s41746-024-01197-3
